Little Boxes

In my last article I introduced ginprov, an improvisational web server that makes up content as it goes along. I've been having fun hosting a live instance of ginprov on ginprov.com, which will stay up until my free credits run out. Every so often I check to see what users have created.

Early on, I marveled at the diversity of sites, such as a-dating-site-for-gnomes, upma-is-bad, and calculus-with-ducks. Ginprov (or, who am I kidding, Gemini) rendered perfectly constructed web pages every time. As more sites were created, however, I began to notice something about the ginprov output. Take a look:

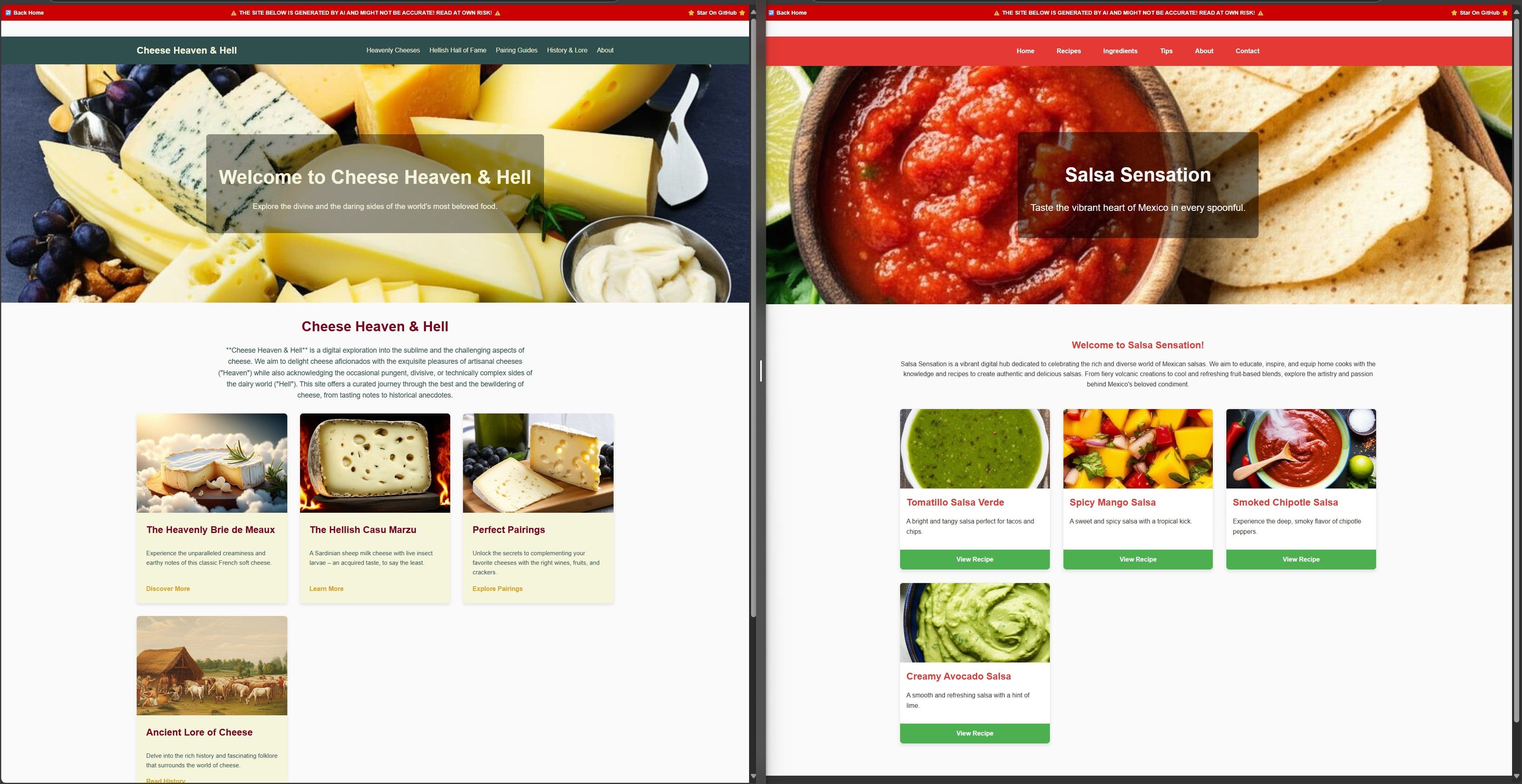

Despite their varying subject matter, the social cards together had a uniform look. The sites themselves were alike too: they shared layouts, techniques, and colors. A “hero plus cards” layout was especially common. Do you prefer cheese or salsa?

Some sites did look different, so this wasn't a template. It was simply the natural way Gemini responded to my prompt: the small change in input between sites was not enough to elicit a dramatically different response. Ironically, proposal-to-ban-ai-fluff was one of the less common layouts.

A Feature, Not A Bug

So what is going on here? Ginprov works by first asking the LLM to generate a site outline and style guide for the slug. Then, for each page and image, it gives the LLM that guide plus the page URL and asks it to render the content. In this approach, the only variance in input between sites is the initial slug, which is limited to 50 characters. A cryptographic hash such as SHA256 is designed so that flipping a single input bit changes about half of the output bits, making the new output look unrelated to the old one. The LLM function (and LLMs are just complex functions) is much more stable. Fifty characters do not contain enough entropy to elicit a dramatically different response.
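To make the SHA256 side of that contrast concrete, here is a small sketch using Python's standard `hashlib` module. It flips one bit of a slug and counts how many of the 256 output bits change. The helper names (`sha256_bits`, `flip_bit`, `avalanche`) are my own, chosen for illustration; nothing here is part of ginprov.

```python
import hashlib


def sha256_bits(data: bytes) -> int:
    """Return the SHA-256 digest of data as a 256-bit integer."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def flip_bit(data: bytes, bit: int) -> bytes:
    """Return a copy of data with a single bit flipped (bit 0 = lowest bit of byte 0)."""
    buf = bytearray(data)
    buf[bit // 8] ^= 1 << (bit % 8)
    return bytes(buf)


def avalanche(slug: str, bit: int = 0) -> int:
    """Count how many of the 256 output bits change when one input bit flips."""
    original = slug.encode("utf-8")
    changed = flip_bit(original, bit)
    # XOR leaves a 1 wherever the two digests differ; count those bits.
    return bin(sha256_bits(original) ^ sha256_bits(changed)).count("1")


if __name__ == "__main__":
    slug = "calculus-with-ducks"
    for bit in range(4):
        print(f"flip input bit {bit}: {avalanche(slug, bit)} of 256 output bits changed")
```

Each run should report a count somewhere near 128, i.e. roughly half the digest, even though the input changed by one bit. An LLM given "balculus-with-ducks" instead of "calculus-with-ducks" makes no such promise.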

For LLMs, this stability is usually a feature, not a bug: it makes their behavior with templated prompts predictable and useful. But will it also make LLM output inevitably more formulaic and less creative than human output?
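The templated, two-stage flow described above can be sketched roughly as follows. This is a hypothetical reconstruction, not ginprov's actual code: the prompt wording, `RecordingLLM` (a stub that records prompts instead of calling Gemini), and `render_site` are all assumptions made for illustration. What it shows is that every prompt comes from a fixed template, so the slug is the only external input that differs between two sites.

```python
from dataclasses import dataclass, field

# Hypothetical prompt templates, invented for this sketch.
OUTLINE_TEMPLATE = (
    "You are designing a website for the slug '{slug}'. "
    "Write a site outline and a style guide."
)
PAGE_TEMPLATE = (
    "Site outline and style guide:\n{guide}\n\n"
    "Render the complete HTML for the page at URL {url}."
)
MAX_SLUG_LEN = 50  # the slug limit described in the article


@dataclass
class RecordingLLM:
    """Stand-in for a real LLM client: records each prompt, returns canned text."""
    prompts: list = field(default_factory=list)

    def generate(self, prompt: str) -> str:
        self.prompts.append(prompt)
        return f"<model output #{len(self.prompts)}>"


def render_site(llm, slug: str, page_urls: list) -> dict:
    """Create one outline/style guide for the slug, then render each page with it."""
    if len(slug) > MAX_SLUG_LEN:
        raise ValueError(f"slug is longer than {MAX_SLUG_LEN} characters")
    guide = llm.generate(OUTLINE_TEMPLATE.format(slug=slug))
    pages = {}
    for url in page_urls:
        # Every page prompt reuses the same guide; only the URL changes.
        pages[url] = llm.generate(PAGE_TEMPLATE.format(guide=guide, url=url))
    return pages


if __name__ == "__main__":
    cheese, salsa = RecordingLLM(), RecordingLLM()
    render_site(cheese, "cheese", ["/"])
    render_site(salsa, "salsa", ["/"])
    # The two outline prompts are identical except for the slug itself.
    print(cheese.prompts[0])
    print(salsa.prompts[0])
```

Swapping `RecordingLLM` for a real client would leave the structure unchanged: a few dozen fixed words of instructions surround a slug of at most 50 characters.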

Implementing A Drive for Originality

Humans are often compelled by a desire to be original. In creative fields we are discouraged and ridiculed, or even harassed and sued, for copying others. It’s hard to instill this same drive in an LLM:

- The LLM cannot remember past output, so it is unaware when its cheese site looks like its salsa site.

- The same LLM, with the same training data, is reused by millions of users, so there is less variety in its training than across humans, who each learn from different experiences.

- The LLM as a function needs sufficient change in the input to drive significant change in the output. It is not designed to have an avalanche effect like SHA256. Maybe the temperature parameter could help here, although I was not able to see much of a difference in the HTML output even at maximum temperature.
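One plausible reason temperature helps less than hoped: in the textbook formulation, temperature divides the model's scores (logits) before the softmax. That flattens the probability distribution but never changes the ranking of the options. The sketch below is that generic formulation with made-up logits for four competing choices (imagine four candidate layouts, the first being "hero plus cards"). It is not Gemini's internal implementation, and real APIs typically combine temperature with other sampling settings such as top-k or top-p.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, dividing by temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Made-up scores: the first option is strongly preferred.
    logits = [4.0, 2.0, 1.0, 0.0]
    for t in (0.5, 1.0, 2.0):
        probs = softmax_with_temperature(logits, t)
        print(t, [round(p, 3) for p in probs])
```

With these numbers the favored option's probability drops from about 0.98 at temperature 0.5 to about 0.58 at 2.0, yet it remains the single most likely choice at every temperature.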

If I were to try to add more creativity to ginprov, I would probably stuff previously rendered sites into the context, state the desire for originality in the prompt, and inject a few hundred random tokens into the context. I'm less sure about that last idea, but it's worth a try; it might achieve a higher level of originality.
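Here is a minimal sketch of what such a prompt builder might look like, assuming short text summaries of earlier sites are available. Everything in it is hypothetical: `build_originality_prompt`, `NOISE_VOCAB`, and the prompt wording are invented for illustration. Random words from a small list stand in for "random tokens", since how words map to tokens depends on the model's tokenizer.

```python
import random

# Hypothetical word list used as a rough proxy for random tokens.
NOISE_VOCAB = [
    "amber", "basalt", "cobalt", "dune", "ember", "fjord", "gossamer",
    "harbor", "indigo", "jasper", "kelp", "lattice", "mosaic", "nebula",
    "origami", "prism", "quartz", "rust", "sienna", "tundra",
]


def build_originality_prompt(slug, previous_sites, n_noise=300, seed=None,
                             max_previous=5):
    """Assemble an outline prompt that discourages repeating earlier designs."""
    rng = random.Random(seed)  # seed=None gives fresh noise on every call
    # 1. Stuff the most recent site summaries into the context.
    recent = previous_sites[-max_previous:]
    history = "\n".join(f"- {summary}" for summary in recent) or "- (none yet)"
    # 2. Inject a run of random words as noise.
    noise = " ".join(rng.choice(NOISE_VOCAB) for _ in range(n_noise))
    # 3. State the desire for originality explicitly.
    return (
        f"Design a website for the slug '{slug}'.\n"
        "Previously generated sites (do NOT reuse their layout, "
        "colors, or techniques):\n"
        f"{history}\n"
        "Be original: choose a layout unlike the ones above.\n"
        "Random inspiration (ignore its literal meaning):\n"
        f"{noise}\n"
    )


if __name__ == "__main__":
    earlier = ["hero plus cards, warm orange palette", "hero plus cards, dark mode"]
    print(build_originality_prompt("salsa", earlier, n_noise=20, seed=42))
```

Telling the model to ignore the literal meaning of the noise is a design guess on my part; whether the noise should be labeled at all is one of the things such an experiment would need to test.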

Fundamentally, though, I think LLMs are not set up as sources of creativity. Whether this will limit them in real-world applications remains to be seen.

Does This Make Sense?

AI is not my day job. If you disagree with this take, please let me know on X.